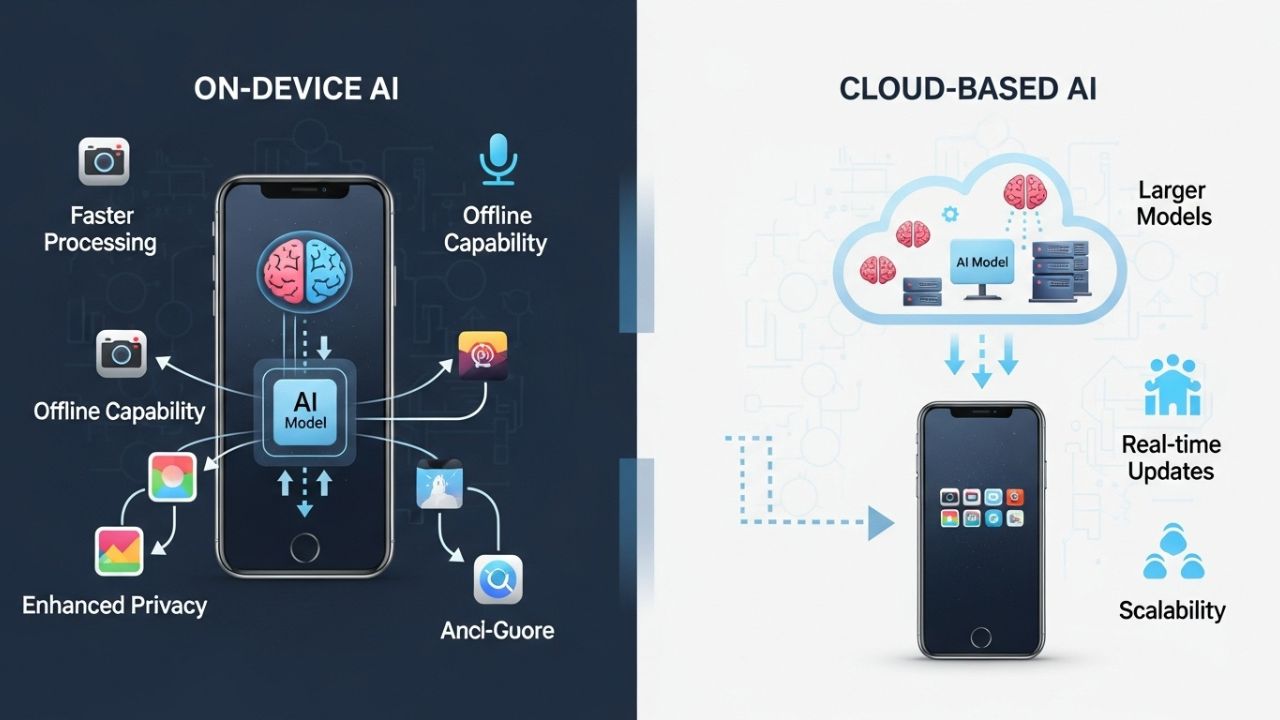

Artificial intelligence is reshaping mobile apps, making them smarter and more intuitive for everyday users worldwide. In 2025, the choice between on-device AI and cloud-based AI affects everything from app speed to user privacy, and on-device processing is gaining traction as smartphone hardware improves. On-device AI runs computations locally on the user’s device, offering fast responses without relying on an internet connection, while cloud-based AI uses remote servers for heavier workloads but requires connectivity. This distinction matters for developers who need to balance performance, security, and scalability in apps ranging from fitness trackers to social media platforms.

As mobile usage surges in regions like India, where reliable internet isn’t always guaranteed, understanding these differences helps users appreciate why some features feel seamless while others lag. On-device AI processes data on the spot, preserving privacy by keeping sensitive information local, whereas cloud AI excels in handling vast datasets for personalized experiences. By exploring these approaches, readers can grasp how AI is evolving mobile technology to fit real-world needs, from offline navigation to real-time translation.

Understanding On-Device AI in Mobile Apps

On-device AI refers to artificial intelligence models that execute directly on the smartphone’s hardware, such as neural processing units (NPUs) in chips like Qualcomm’s Snapdragon or Apple’s A-series processors. These models use optimized, lightweight algorithms trained to perform tasks like image recognition or voice commands without sending data off the device. This local processing enables apps to deliver quick, responsive features, ideal for scenarios where every millisecond counts, such as augmented reality filters in social apps.

The technology relies on advancements in model compression techniques, like quantization, which reduce AI models’ size while maintaining accuracy, allowing them to fit within a phone’s limited RAM and storage. For instance, Google’s Gemini Nano runs entirely on-device for tasks like summarizing text in messaging apps, ensuring functionality even in remote areas with spotty signals. This approach not only boosts efficiency but also aligns with growing privacy regulations by minimizing data transmission.
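To make the quantization idea concrete, here is a minimal sketch in plain Python. It assumes a simple symmetric 8-bit scheme with one shared scale per tensor; the function names are illustrative and not taken from any framework, and production toolchains apply more elaborate schemes.

```python
def quantize_int8(weights):
    """Map float weights to int8 values using one shared scale (symmetric scheme)."""
    max_abs = max(abs(w) for w in weights)
    # Avoid dividing by zero when every weight is 0.0.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the int8 values."""
    return [q * scale for q in quantized]


weights = [0.82, -1.27, 0.05, 0.0, 0.64]  # made-up example values
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# Each float32 weight takes 4 bytes; each int8 weight takes 1 byte.
float32_bytes = 4 * len(weights)
int8_bytes = 1 * len(weights)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, float32_bytes, int8_bytes, max_error)
```

The stored model shrinks to roughly a quarter of its float32 size, and the rounding error per weight stays within half of the scale, which is why accuracy often degrades only slightly.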

In 2025, on-device AI is becoming standard in budget and mid-range phones, thanks to affordable hardware from manufacturers like MediaTek, democratizing access to intelligent features. Developers integrate it via frameworks like Core ML for iOS or TensorFlow Lite for Android, enabling seamless embedding without overhauling app architecture. Overall, on-device AI transforms mobile apps into self-sufficient tools that prioritize user control and immediacy.

The Mechanics of Cloud-Based AI in Mobile Apps

Cloud-based AI, in contrast, offloads computations to remote servers hosted by providers like AWS or Google Cloud, where apps send queries and receive processed results. This method harnesses massive computational resources, including GPUs and specialized AI accelerators, to tackle complex tasks that exceed mobile hardware limits. For example, when using a translation app like Google Translate for nuanced, context-aware rendering of entire paragraphs, the cloud processes the input against enormous language datasets.

The workflow involves API calls from the app to cloud endpoints, where models like large language models (LLMs) analyze data and return outputs, often with real-time updates to improve accuracy over time. This scalability allows apps to handle peak loads, such as personalized recommendations in e-commerce platforms during sales events. However, it demands stable internet, introducing potential delays in regions with variable connectivity, like rural Tamil Nadu.
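The request and response cycle described here can be sketched with Python’s standard library. The endpoint URL and the JSON field names (`text`, `target`, `translation`) are hypothetical placeholders chosen for illustration; they do not correspond to any real provider’s API.

```python
import json
import urllib.request

# Hypothetical endpoint, used only for illustration.
ENDPOINT = "https://api.example.com/v1/translate"


def build_request(text, target_lang):
    """Package the user's input as a JSON POST request for the cloud model."""
    payload = json.dumps({"text": text, "target": target_lang}).encode("utf-8")
    return urllib.request.Request(
        ENDPOINT,
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def parse_response(body):
    """Extract the model output from the (assumed) JSON response body."""
    return json.loads(body.decode("utf-8"))["translation"]


def translate(text, target_lang, timeout_s=3.0):
    """Send the request and return the result.

    Raises urllib.error.URLError (a subclass of OSError) when the
    network is unavailable, which is the failure mode discussed above.
    """
    request = build_request(text, target_lang)
    with urllib.request.urlopen(request, timeout=timeout_s) as response:
        return parse_response(response.read())
```

The explicit timeout matters on variable networks: without it, a stalled connection can leave the feature hanging instead of failing fast.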

Cloud AI thrives on continuous learning from aggregated, anonymized data across users, enabling features like fraud detection in banking apps that evolve with new threat patterns. Integration is straightforward through services like Azure AI, but it requires careful data handling to comply with standards like GDPR. In essence, cloud-based AI turns mobile apps into gateways to expansive intelligence, though at the cost of dependency on external infrastructure.

Key Differences: Performance, Privacy, and Accessibility

The core differences between on-device and cloud-based AI stem from where the computation runs, which directly affects app performance and user trust. On-device AI delivers very low latency, often under 100 milliseconds for tasks like facial unlocking, because processing involves no network round trip. Cloud AI, while powerful, adds delays of 200-500 milliseconds or more, depending on bandwidth and network conditions, making it less suitable for time-sensitive interactions like live video editing.
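The latency gap is easier to see as a simple budget. The sketch below adds up the components of a cloud call and compares them with a local call; all figures are illustrative assumptions chosen to fall within the ranges mentioned above, not benchmarks.

```python
def cloud_latency_ms(network_rtt_ms, server_inference_ms, serialization_ms=10):
    """End-to-end time for one cloud call: round trip + server compute + encoding."""
    return network_rtt_ms + server_inference_ms + serialization_ms


def on_device_latency_ms(local_inference_ms):
    """On-device calls skip the network, so only local compute counts."""
    return local_inference_ms


# Illustrative figures only (assumptions, not measurements).
good_network = cloud_latency_ms(network_rtt_ms=80, server_inference_ms=120)
poor_network = cloud_latency_ms(network_rtt_ms=400, server_inference_ms=120)
local = on_device_latency_ms(local_inference_ms=60)

for label, value in [
    ("cloud, good network", good_network),
    ("cloud, poor network", poor_network),
    ("on-device", local),
]:
    print(f"{label}: {value} ms")
```

Note that the server can be faster than the phone at raw inference and still lose overall, because the network round trip dominates the total on a poor connection.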

Privacy is another stark contrast: on-device AI can keep data, such as health metrics in fitness apps, on the phone, which reduces breach risks and limits how much personal data has to be shared with a server in the first place. Cloud-based systems transmit data to servers, raising concerns about surveillance or hacks, though encryption and edge computing mitigate some issues. For users in privacy-focused markets, on-device options build confidence by design.

Accessibility also varies; on-device AI shines offline, powering navigation apps like offline Google Maps in areas without signal. Cloud AI falters without connectivity, limiting its reach in developing regions, but offers broader scalability for global apps. Battery impact differs too: on-device tasks can drain power faster on older devices, while cloud offloading conserves local resources but increases data costs. These trade-offs guide developers in choosing the right fit for specific app functions.

| Aspect | On-Device AI | Cloud-Based AI |
| --- | --- | --- |
| Latency | Very low, real-time | Variable, internet-dependent |
| Privacy | High, data stays local | Moderate, data transmitted |
| Offline Capability | Full support | Limited or none |
| Computational Power | Device-limited | Scales with server capacity |
| Cost for Developers | Lower bandwidth costs, higher optimization effort | Server fees, scalable pricing |
| Model Updates | App or model update pushed to each device | Deployed server-side, available immediately |

This table highlights how on-device AI prioritizes immediacy and security, while cloud AI emphasizes depth and flexibility.

Pros and Cons of On-Device AI

On-device AI’s primary advantage is its independence, allowing apps to function robustly in diverse environments, from urban commutes to remote hikes. It enhances user engagement through snappy responses, as seen in keyboard apps predicting text with minimal delay. Energy efficiency improves with hardware-specific optimizations, like Apple’s Neural Engine handling AI without taxing the CPU.

Yet, challenges include hardware constraints; older phones may struggle with larger models, leading to slower performance or skipped features. Development demands expertise in model pruning to fit AI within 1-2 GB limits, increasing time to market. Scalability is tricky, as updates require pushing to millions of devices individually. Despite these, the privacy edge makes it indispensable for health and finance apps.
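The size constraint comes down to simple arithmetic on the number of parameters and the bits used per weight. The sketch below varies numeric precision rather than pruning, purely because that is easier to compute; the 3-billion-parameter model is a hypothetical example, and the 2 GB budget mirrors the upper limit cited above.

```python
def model_size_gb(num_parameters, bits_per_weight):
    """Approximate storage for the weights alone (1 GB = 10**9 bytes)."""
    return num_parameters * bits_per_weight / 8 / 1e9


def fits_on_device(num_parameters, bits_per_weight, budget_gb=2.0):
    """Check the weights against a memory budget (default: 2 GB)."""
    return model_size_gb(num_parameters, bits_per_weight) <= budget_gb


params = 3_000_000_000  # hypothetical 3-billion-parameter model
for bits in (32, 16, 8, 4):
    size = model_size_gb(params, bits)
    print(f"{bits}-bit: {size:.1f} GB, fits: {fits_on_device(params, bits)}")
```

This estimate covers weights only; activations and runtime overhead add to the real footprint, so a practical budget needs extra headroom.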

In 2025, tools like MediaPipe from Google simplify integration, letting developers prototype on-device features quickly. For content creators, this means apps that edit videos locally without uploading to servers, streamlining workflows.

Pros and Cons of Cloud-Based AI

Cloud-based AI unlocks a level of sophistication that phones cannot match, enabling apps to process petabytes of data for insights like sentiment analysis in customer service chatbots. It scales readily, supporting millions of users during viral trends without device upgrades. Frequent model retraining keeps features current, as with Netflix’s recommendation engine adapting to viewing habits in real time.

Drawbacks include connectivity reliance, which can frustrate users in low-bandwidth areas, potentially increasing churn rates. Privacy risks persist, even with anonymization, prompting scrutiny from regulators. Ongoing server costs can balloon for high-traffic apps, and latency spikes during peak hours degrade experiences. Still, hybrid models blending both approaches are rising, using on-device for basics and cloud for depth.

For global apps, cloud AI facilitates multilingual support, translating content on the fly for diverse audiences. This versatility positions it as a backbone for enterprise solutions.

Real-World Examples in Mobile Apps

Samsung’s Galaxy AI suite demonstrates on-device prowess, with features like Live Translate processing calls locally for instant, private subtitles. In the Notes app, AI summarizes voice memos on-device, ensuring confidentiality for business users. Apple’s Siri enhancements in iOS 18 use on-device models for routine queries, only escalating to cloud for complex ones.

Cloud-based examples abound in social apps; Instagram’s recommendation algorithm analyzes cloud-stored data to curate feeds, drawing from billions of posts. Duolingo employs cloud AI for adaptive learning paths, adjusting lessons based on global user trends. In India, apps like Paytm use cloud AI for fraud detection, scanning transaction patterns against vast historical data.

Hybrid implementations shine in fitness apps like Strava, where on-device GPS tracking handles real-time metrics, but cloud uploads enable community challenges. Google’s Photos app uses on-device for quick edits and cloud for advanced searches across libraries. These cases illustrate how blending approaches maximizes benefits.

In gaming, on-device AI powers AR elements in Pokémon GO for smooth creature overlays, while cloud handles multiplayer matchmaking. For content writers, apps like Grammarly process basic checks on-device and deep plagiarism scans via cloud. By 2025, 60% of top apps adopt hybrids, optimizing for both speed and power.

The Rise of Hybrid AI Approaches

Hybrid AI combines on-device and cloud processing, routing simple tasks locally and complex ones to servers, offering a best-of-both-worlds solution. This model minimizes latency for core functions while leveraging cloud scalability for enhancements, as in WhatsApp’s AI stickers generated on-device but refined via cloud libraries. Developers implement routing logic that picks a path dynamically based on connectivity and task complexity.

Benefits include balanced privacy—sensitive data stays local—and cost efficiency, reducing unnecessary cloud calls. Challenges involve seamless handover logic to avoid jarring shifts in performance. In 2025, frameworks like Android’s AICore support hybrids, enabling apps to adapt fluidly. For users in variable networks, this ensures reliability, like in navigation apps switching modes mid-trip.
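A hybrid router along these lines can be sketched in a few lines of Python. The `Task` fields, the 1-10 complexity score, and the threshold are all hypothetical choices made for illustration; a real app would derive them from its own models and telemetry.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    complexity: int   # hypothetical 1-10 score assigned by the app
    sensitive: bool   # True if the input contains private data


def choose_route(task, online, local_max_complexity=5):
    """Return "device" or "cloud" based on sensitivity, connectivity, and complexity."""
    if task.sensitive:
        return "device"   # sensitive data stays local
    if not online:
        return "device"   # no connectivity, so local is the only option
    if task.complexity <= local_max_complexity:
        return "device"   # simple enough to run locally
    return "cloud"        # complex, non-sensitive, and online


def run(task, online, cloud_fn, device_fn):
    """Run the task on the chosen route, falling back to the device if the cloud call fails."""
    if choose_route(task, online) == "cloud":
        try:
            return cloud_fn(task)
        except OSError:
            # Network dropped mid-request: hand over to the local model.
            return device_fn(task)
    return device_fn(task)


for task in [Task("autocomplete", 2, False),
             Task("summarize report", 9, False),
             Task("health summary", 9, True)]:
    print(task.name, "->", choose_route(task, online=True))
```

The fallback in `run` is the handover logic in miniature: the user still gets a result from the local model, possibly a less refined one, instead of an error when the connection drops.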

Future trends point to more intelligent routing, where AI itself decides processing paths, further blurring lines between the two. This evolution suits diverse needs, from personal productivity to enterprise analytics.

Implications for Developers and Users in 2025

For developers, choosing between on-device and cloud AI influences app architecture, with on-device demanding hardware-aware coding and cloud focusing on API reliability. Budgets factor in: on-device cuts long-term data fees, but cloud subscriptions scale with usage. Testing across devices is vital for on-device, ensuring compatibility with varying NPUs.

Users gain from on-device for privacy-centric apps, like those tracking personal finances, while cloud enhances discovery in entertainment platforms. In India, where data costs matter, on-device adoption is surging, with 70% of new apps incorporating it. Ethical AI practices, like transparent data use, build trust across both.

As hardware evolves, on-device AI will handle more tasks, potentially reducing cloud dependency by 40% by 2026. This shift empowers users with faster, safer apps tailored to their lifestyles.

Frequently Asked Questions

What is the biggest advantage of on-device AI over cloud-based AI?

On-device AI provides superior privacy and low latency by processing data locally, ideal for offline or sensitive applications without internet dependency.

Can cloud-based AI work without an internet connection?

No, cloud-based AI requires stable connectivity to send data to servers and receive results, though some apps cache responses for limited offline use.

Is on-device AI available on all smartphones?

It’s increasingly common in 2025 models with dedicated AI chips, but older devices may lack the hardware for full functionality.

How do hybrid AI models benefit mobile apps?

Hybrid models combine on-device speed for simple tasks with cloud power for complex ones, offering flexibility, better privacy, and cost savings.

Will on-device AI replace cloud-based AI entirely?

Unlikely; each serves unique needs, with hybrids becoming dominant for comprehensive app experiences in diverse scenarios.

Conclusion

On-device and cloud-based AI represent two pillars of mobile innovation, each excelling in ways that complement the other’s strengths to create richer app ecosystems. On-device AI delivers immediacy and security, transforming everyday interactions into seamless experiences, while cloud-based AI unlocks boundless potential for data-driven insights. As hybrids bridge the gap, 2025 marks a pivotal year where mobile apps become truly intelligent companions.

For developers and users alike, this duality means more choices tailored to context, from privacy-focused tools to globally scaled services. Embracing these technologies not only enhances functionality but also redefines accessibility in a connected yet intermittent world. Looking ahead, the fusion of both will drive even more personalized, efficient mobile futures.
